Ellyptech Research

Post-Quantum Cryptography

In recent years, there has been a substantial amount of research on quantum computers – machines that exploit quantum mechanical phenomena to solve mathematical problems that are difficult or intractable for conventional computers. If large-scale quantum computers are ever built, they will be able to break many of the public-key cryptosystems currently in use. This would seriously compromise the confidentiality and integrity of digital communications on the Internet and elsewhere. The goal of post-quantum cryptography (also called quantum-resistant cryptography) is to develop cryptographic systems that are secure against both quantum and classical computers, and can interoperate with existing communications protocols and networks.

The question of when a large-scale quantum computer will be built is a complicated one. While in the past it was less clear that large quantum computers are a physical possibility, many scientists now believe it to be merely a significant engineering challenge. Some engineers even predict that within the next twenty or so years sufficiently large quantum computers will be built to break essentially all public key schemes currently in use. Historically, it has taken almost two decades to deploy our modern public key cryptography infrastructure. Therefore, regardless of whether we can estimate the exact time of the arrival of the quantum computing era, we must begin now to prepare our information security systems to be able to resist quantum computing.

NIST Initiative

Post-quantum cryptography (PQC) is a response to the threat posed by a full-scale quantum computer able to execute Shor’s algorithm. Once this algorithm can be run on such a machine, currently used public-key schemes, such as RSA and elliptic curve cryptosystems, will no longer be secure. The U.S. National Institute of Standards and Technology (NIST) took a step toward mitigating the risk of quantum attacks by announcing the PQC standardization process. In July 2020, NIST published a list of candidates qualified for the third (final) round of the PQC process. To date, hardware performance of Round 1 candidates has been reported for only a small percentage of all submissions.

Candidate Algorithms

Researchers are developing cryptographic algorithms to resist attacks by both classical and quantum computers. The major classes of post-quantum cryptography algorithms are:

Lattice-based cryptography

Lattice-based cryptography algorithms offer the best performance, but are the least conservative of all the classes. Lattice cryptography builds on the hardness of the shortest vector problem (SVP), which entails approximating the minimal Euclidean length of a nonzero lattice vector. Even with a quantum computer, no algorithm is known that solves SVP in time polynomial in the lattice dimension n. Other lattice cryptography algorithms are based on the Short Integer Solution (SIS) problem. These are secure in the average case if SVP is hard in the worst case.
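To make the shortest vector problem concrete, the following is a minimal sketch that searches a two-dimensional lattice exhaustively. The basis, the coefficient bound, and the function name `shortest_vector` are illustrative assumptions, not taken from any PQC submission. The search only covers integer combinations inside the given coefficient box, so it is a toy: the number of combinations grows exponentially with the dimension, which is why this approach is hopeless for the dimensions used in practice.

```python
from itertools import product

def shortest_vector(basis, bound):
    """Search all integer combinations of the basis vectors with
    coefficients in [-bound, bound] and return (squared_norm, vector)
    for the shortest nonzero lattice vector found inside that box."""
    dim = len(basis[0])
    best = None
    for coeffs in product(range(-bound, bound + 1), repeat=len(basis)):
        if not any(coeffs):
            continue  # skip the zero vector
        v = tuple(sum(c * b[j] for c, b in zip(coeffs, basis))
                  for j in range(dim))
        norm_sq = sum(x * x for x in v)
        if best is None or norm_sq < best[0]:
            best = (norm_sq, v)
    return best

# A deliberately "bad" (long, nearly parallel) basis of a toy lattice.
# The same lattice is also generated by the short vectors (3, 1) and (-2, 3).
BAD_BASIS = [(7, 6), (18, 17)]

norm_sq, vec = shortest_vector(BAD_BASIS, bound=5)
print(norm_sq, vec)
```

For this toy basis the shortest vector has squared length 10 and is reached with small coefficients, so the box of size 5 is sufficient. In general the bound would have to be justified separately.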

Code-based cryptography

Code-based cryptography uses error-correcting codes and offers a conservative approach to public-key encryption and key encapsulation, as it is based on a problem that has been studied for over four decades. This class of algorithms uses large keys, and some attempts at reducing the key size have made the algorithms vulnerable to attacks. Researchers have proposed techniques to reduce the key size without compromising security.
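The basic building block, an error-correcting code whose decoder removes errors from a received word, can be illustrated with the classic Hamming(7,4) code. This is a sketch for intuition only and not a cryptosystem: the code is far too small to be secure, and the function names `encode`, `syndrome`, and `decode` are chosen here for illustration. Code-based schemes rely on much larger codes whose efficient decoder is kept secret.

```python
def encode(data):
    """Encode 4 data bits into a 7-bit Hamming codeword.
    Positions are numbered 1..7; parity bits sit at positions 1, 2 and 4."""
    c = [0] * 8  # index 0 is unused so that indices match positions
    c[3], c[5], c[6], c[7] = data
    c[1] = c[3] ^ c[5] ^ c[7]
    c[2] = c[3] ^ c[6] ^ c[7]
    c[4] = c[5] ^ c[6] ^ c[7]
    return c[1:]

def syndrome(word):
    """XOR of the (1-based) positions holding a 1.
    It is 0 for a valid codeword and equals the error position
    when exactly one bit has been flipped."""
    s = 0
    for pos, bit in enumerate(word, start=1):
        if bit:
            s ^= pos
    return s

def decode(word):
    """Correct at most one flipped bit and return the 4 data bits."""
    w = list(word)
    s = syndrome(w)
    if s:
        w[s - 1] ^= 1
    return [w[2], w[4], w[5], w[6]]

codeword = encode([1, 0, 1, 1])
received = list(codeword)
received[4] ^= 1               # flip one bit to simulate an error
print(decode(received))        # recovers [1, 0, 1, 1]
```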

Multivariate polynomial cryptography

Multivariate polynomial cryptography relies on the difficulty of solving systems of multivariate polynomial (MVP) equations over finite fields. Solving such systems is NP-hard over any field, and the problem remains NP-complete even if all equations are quadratic over GF(2). Multivariate schemes are preferred as signature schemes because they offer the shortest signatures. However, some of these schemes have been broken.
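As a small illustration of the underlying problem, the sketch below evaluates a system of quadratic equations over GF(2) and solves it by trying every assignment. The system, its encoding as tuples of variable indices, and the function names are assumptions made for this example. With n variables the exhaustive search visits 2^n assignments, which is feasible here only because n = 3.

```python
from itertools import product

# Each polynomial is a list of monomials. A monomial is a tuple of variable
# indices: () is the constant 1, (0,) is x0, and (0, 2) is x0*x2.
SYSTEM = [
    [(0, 1), (2,), ()],      # x0*x1 + x2 + 1 = 0
    [(0, 2), (1,)],          # x0*x2 + x1     = 0
    [(1, 2), (0,), (1,)],    # x1*x2 + x0 + x1 = 0
]

def evaluate(poly, x):
    """Evaluate one polynomial over GF(2): AND is multiplication, XOR is addition."""
    total = 0
    for monomial in poly:
        term = 1
        for i in monomial:
            term &= x[i]
        total ^= term
    return total

def solve_by_exhaustion(system, n):
    """Return every assignment of n bits that zeroes all polynomials."""
    return [x for x in product((0, 1), repeat=n)
            if all(evaluate(p, x) == 0 for p in system)]

print(solve_by_exhaustion(SYSTEM, 3))   # [(0, 0, 1)]
```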

Hash-based digital signatures

Hash-based digital signatures resist quantum-computer attacks. These schemes are based on the security properties of the underlying cryptographic hash functions (collision resistance and second pre-image resistance).
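One way to see how a signature can rest on a hash function alone is the Lamport one-time signature, sketched below with SHA-256 from Python's standard library. The choice of Lamport's scheme is an assumption made for illustration; the hash-based candidates considered for standardization are considerably more elaborate. A key pair in this sketch must sign only a single message, because each signature reveals half of the secret key.

```python
import hashlib
import secrets

def _h(data):
    return hashlib.sha256(data).digest()

def keygen(bits=256):
    """Secret key: two random 32-byte strings per digest bit.
    Public key: the SHA-256 hash of each of those strings."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(bits)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk

def digest_bits(message):
    """The 256 bits of SHA-256(message), most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in _h(message) for i in range(8)]

def sign(sk, message):
    """Reveal, for every digest bit, the secret string selected by that bit."""
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]

def verify(pk, message, signature):
    """Hash every revealed string and compare with the matching public value."""
    bits = digest_bits(message)
    if len(signature) != len(bits) or len(pk) != len(bits):
        return False
    return all(_h(s) == pk[i][bit]
               for i, (s, bit) in enumerate(zip(signature, bits)))

sk, pk = keygen()
sig = sign(sk, b"post-quantum")
print(verify(pk, b"post-quantum", sig))
```

Forging a signature on a different message would require finding a preimage of one of the published hash values, which is why the security reduces to properties of the hash function.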

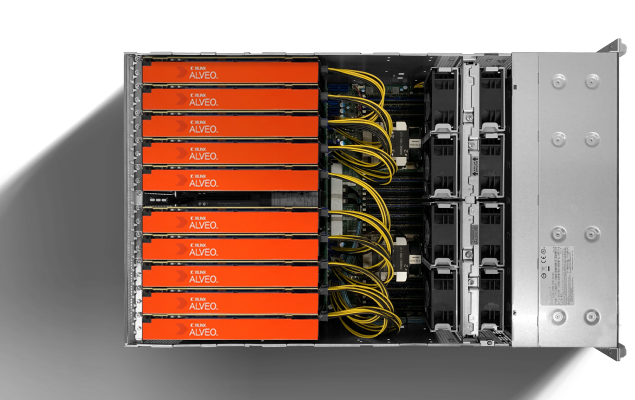

FPGA-Based Post-Quantum Cryptography

Implementing PQC algorithms in hardware involves a unique set of challenges. Some of these challenges are specific to the current state of standardization, in which a large number of candidates still remain, and the primary goal of the implementation is a fair evaluation of submissions to the NIST standardization process. Other challenges will remain in place even after the first PQC standards are published.

At this point, one of the biggest challenges seems to be the mathematical complexity of the specifications, which are often written by cryptographers concerned primarily with demonstrating the security of their schemes rather than the ease of their implementation. Understanding these specifications often requires a solid background in number theory, abstract algebra, coding theory, and other related disciplines. Hardware implementers with a classical background in computer engineering are often ill-equipped to understand these specifications, which are frequently riddled with complex formulas and high-level mathematical operations that need to be “deciphered” by reading and understanding the corresponding C source code. Clearly, a reference implementation in C, although helpful and potentially suitable as an input to high-level synthesis (HLS) tools, may also hide many potential optimizations that are possible only in hardware and are accomplished by performing a given high-level operation using a different low-level algorithm.

At the current stage of the standardization process, another major challenge is the fact that the remaining candidates belong to five different families: lattice-based, code-based, multivariate, symmetric-based, and isogeny-based. Each of these families has several subfamilies. For example, lattice-based candidates are divided into schemes with unstructured (a.k.a. random) and structured (a.k.a. ideal) lattices. Similarly, code-based schemes have been classified by NIST into three subfamilies: Algebraic, Short Hamming (a.k.a. Quasi-Cyclic), and Low Rank. Each family or even subfamily requires a different mathematical background, involves a different set of basic operations, and poses its own set of optimization challenges.

In general, basic operations of PQC schemes are very different from those used by traditional public-key schemes, such as RSA or ECC. In particular, the operations on large integers (such as modular multiplication modulo a large prime or a product of two large primes), determining the complexity of the majority of current public-key cryptography standards, remain relevant in only one out of five major PQC families, isogeny-based cryptography. Some of the new operations, found in various PQC schemes, offer major advantages, such as higher potential for parallelization, ability to use optimization methods developed as part of different branches of science and engineering (such as Fast Fourier Transform, typically associated with digital signal and image processing), or suitability for very compact implementations. Others pose new challenges, such as sequential nature, large memory requirements, or variable execution time.
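As an example of borrowing a Fast Fourier Transform technique, the sketch below multiplies two polynomials with a number-theoretic transform (NTT), the finite-field counterpart of the FFT. Everything specific here is an assumption chosen for illustration: the ring Z_q[x]/(x^N + 1), the toy parameters q = 257 and N = 8, and the function names. The schoolbook routine takes on the order of N^2 coefficient multiplications, while the transform-based one takes on the order of N log N. The modular inverse via `pow(x, -1, q)` requires Python 3.8 or later.

```python
Q = 257                               # toy prime with Q ≡ 1 (mod 2N)
N = 8                                 # polynomials are reduced modulo x^N + 1
PSI = pow(3, (Q - 1) // (2 * N), Q)   # primitive 2N-th root of unity (3 generates Z_257^*)
OMEGA = PSI * PSI % Q                 # primitive N-th root of unity

def ntt(a, omega):
    """Recursive radix-2 Cooley-Tukey transform over Z_Q."""
    n = len(a)
    if n == 1:
        return a[:]
    even = ntt(a[0::2], omega * omega % Q)
    odd = ntt(a[1::2], omega * omega % Q)
    out = [0] * n
    w = 1
    for k in range(n // 2):
        t = w * odd[k] % Q
        out[k] = (even[k] + t) % Q
        out[k + n // 2] = (even[k] - t) % Q   # uses omega^(n/2) = -1
        w = w * omega % Q
    return out

def multiply_ntt(a, b):
    """Multiply in Z_Q[x]/(x^N + 1) using pointwise products in the NTT domain."""
    # Scaling coefficient i by PSI^i turns the negacyclic product into a cyclic one.
    a_s = [x * pow(PSI, i, Q) % Q for i, x in enumerate(a)]
    b_s = [x * pow(PSI, i, Q) % Q for i, x in enumerate(b)]
    fc = [x * y % Q for x, y in zip(ntt(a_s, OMEGA), ntt(b_s, OMEGA))]
    c_s = ntt(fc, pow(OMEGA, -1, Q))          # inverse transform, still unscaled
    n_inv, psi_inv = pow(N, -1, Q), pow(PSI, -1, Q)
    return [x * n_inv * pow(psi_inv, i, Q) % Q for i, x in enumerate(c_s)]

def multiply_schoolbook(a, b):
    """Reference O(N^2) multiplication in the same ring, using x^N = -1."""
    c = [0] * N
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            k = i + j
            if k < N:
                c[k] = (c[k] + x * y) % Q
            else:
                c[k - N] = (c[k - N] - x * y) % Q
    return c

a = [1, 1, 0, 0, 0, 0, 0, 0]          # 1 + x
b = [0, 0, 0, 0, 0, 0, 0, 1]          # x^7
print(multiply_ntt(a, b))             # x^7 - 1, i.e. [256, 0, 0, 0, 0, 0, 0, 1]
```

In hardware, the butterfly inside the loop of `ntt` is the natural unit to replicate, which is one reason transform-based multiplication parallelizes well.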

Some of the underlying operations might have never been implemented in hardware before. For example, codes used in code-based cryptography are typically different from those commonly used to achieve error-free communication. As a result, there may have never been a strong incentive to implement the corresponding encoding and decoding algorithms in hardware. The in-depth knowledge of coding theory required for optimized implementations of these algorithms has for years been beyond the scope of traditional cryptographic engineering, computer arithmetic, or even computer engineering. Additionally, in other branches of science and engineering, there is no tradition of making the code of the best implementations available as open source, which has been a major factor driving improvements in cryptographic engineering. The number of publications on hardware implementations of candidates for PQC standards is still relatively small, and the reported results are very difficult to compare due to divergent assumptions and optimization targets. Consequently, little is known about the optimal way of implementing these schemes in hardware for various optimization criteria, such as minimum area, power, and energy per bit; maximum speed; and minimum latency-area product.

An additional challenge is that a significant percentage of the candidates submitted to Round 1 of the NIST standardization process have been partially broken. These breaks discouraged early attempts at hardware and optimized software implementations. The common perception was that such implementations were premature and involved considerable risk. The worst-case scenario involved a major implementation and optimization effort, followed by an inability to publish results because, in the meantime, the target of the investigation was shown to be vulnerable to powerful attacks, and hence deemed unsuitable for future standardization. Due to the mathematical and algorithmic complexity of the PQC algorithms, and the limited amount of previous work, the workload for even a single algorithm, implemented using the traditional RTL approach, can easily reach several man-months. Close collaboration with the algorithm designers is highly recommended and often indispensable.